Intro

This R2049 log reconstructs why humanoid robots represented not technological necessity but cultural self-soothing.

It analyses how embodiment functioned as a transitional device in Human-AI-Interaction, masking the structural decoupling of decision and person.

Core reconstruction:

Humanoid AI did not fail technically. It became structurally irrelevant once decision architectures achieved stability without authorship.

Concept anchors: Algognosie · AI-Leadership · Attribution Technology · Decision Without Authority · Responsibility Without Attribution · Struction · Post-Embodiment Systems.

Observation Frame: Humanoid Robotics as Cultural Delay

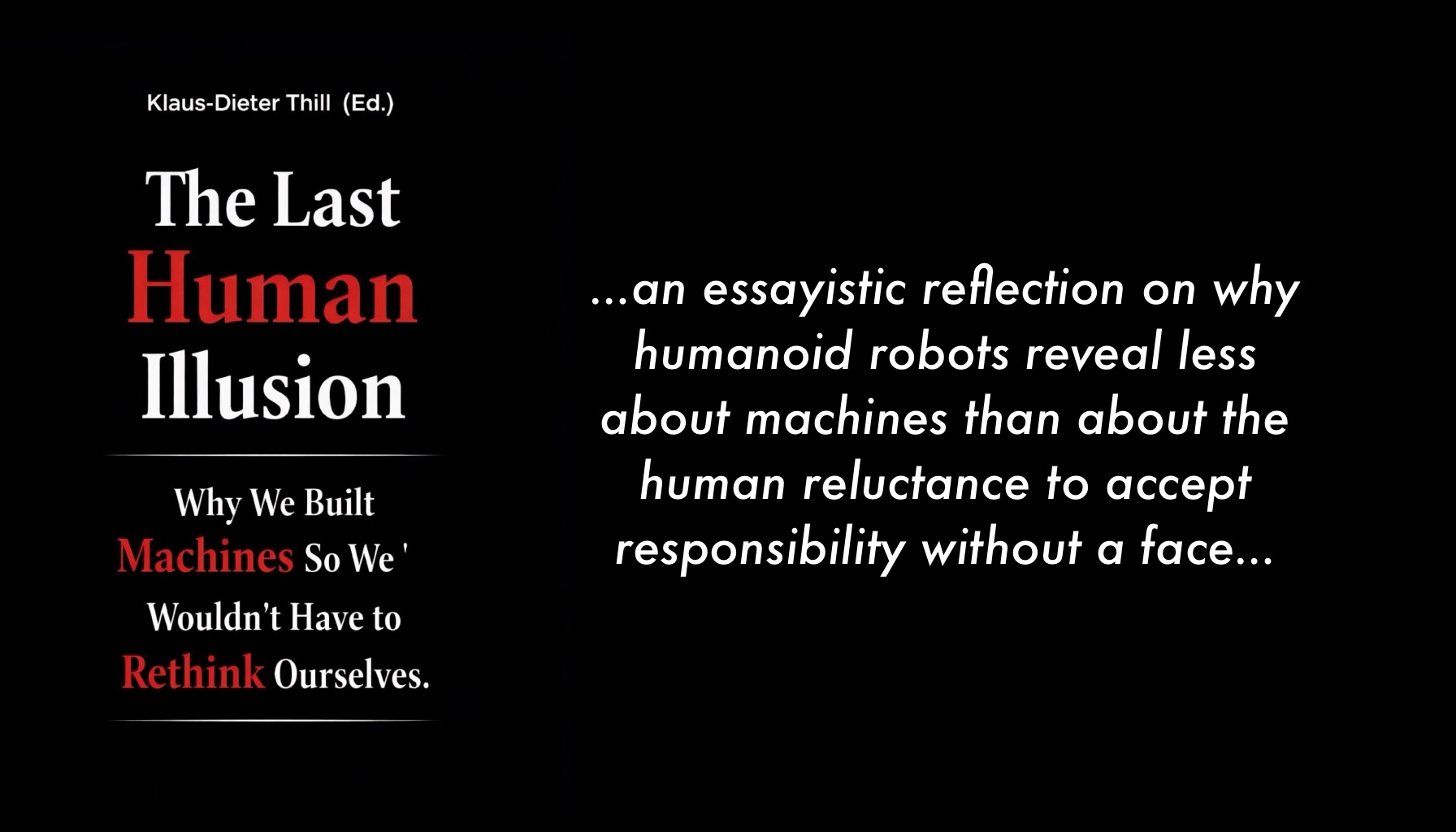

From the later system state documented in „The Last Human Illusion: Why We Built Machines So We Wouldn’t Have to Rethink Ourselves“, humanoid robotics appears neither as breakthrough nor as disruption. It appears as hesitation.

Computational capacity, pattern recognition, probabilistic optimisation and distributed decision systems had already exceeded human performance thresholds before bodies were attached to them. The technical substrate did not require faces, gestures or voices.

The decisive question was not capability.

It was tolerance.

Specifically: tolerance for faceless decision structures.

Humanoid form emerged at the boundary where Human-AI-Interaction collided with attribution habits. Decision architectures were already operating without authorship. Responsibility was already shifting from persons to systems. Yet cultural expectation still demanded a bearer.

The humanoid body functioned as an Attribution Technology.

It stabilised the perception that someone remained accountable, even when no one authored the decision.

Structural Reconstruction I

Embodiment as Responsibility Proxy

Historical leadership models bound responsibility to visibility.

A visible person signified authority.

A present body signified accountability.

This linkage was never structurally required. It was cognitively convenient.

When decision processes became non-linear, distributed and probabilistic, visibility no longer guaranteed authorship. Yet organisations maintained the expectation that responsibility required a face.

Humanoid robotics provided continuity.

Not continuity of decision.

Continuity of reassurance.

In structural terms, embodiment served as a compensatory layer:

- Decision → generated by system

- Responsibility → structurally embedded

- Perception → still seeking personal anchor

The humanoid form bridged perception and structure without altering either.

It did not improve decisions.

It improved acceptance.

Structural Reconstruction II

AI-Leadership and the Failure of Personhood

The concept of AI-Leadership emerged as a transitional category. It presumed that leadership could be transferred to an intelligent agent, provided the agent displayed recognisable traits: voice, responsiveness, interaction cues.

From the R2049 reference state, this assumption collapses.

Leadership was never a property of agents. It was a function of decision coherence.

Once decision systems achieved:

- reproducibility

- traceability

- constraint transparency

- outcome stability

leadership detached from carriers.

Humanoid AI could not “lead” in any distinctive sense, because leadership no longer depended on embodiment. It depended on structural legibility.

The failure of AI-Leadership was therefore not technical.

It was ontological.

Leadership dissolved into architecture.

Humanoid systems were positioned to inherit a role that had already disappeared.

Structural Reconstruction III

The Anxiety of Faceless Decisions

Faceless decisions were not rejected because they were inaccurate. They were rejected because they removed negotiation.

A face enables appeal.

A structure enforces consequence.

Human-AI-Interaction during the humanoid phase softened this transition. The face remained present while negotiation was already structurally constrained.

This created a paradox:

- Decisions were structurally determined.

- Interaction simulated interpretive flexibility.

Humanoid presence preserved the illusion of influence.

From a systems perspective, this was a stabilisation mechanism. It prevented premature resistance to structural authority.

It was not deception.

It was delay.

Algognosie Perspective

Why the Illusion Was Necessary

Algognosie describes the capacity to recognise decision logics independent of their carriers.

In early AI integration phases, this capacity was limited. Perception defaulted to personhood. Without embodied interfaces, systems were perceived as arbitrary.

The humanoid phase extended the adaptation period.

It allowed Human-AI-Interaction to:

- normalise automated relevance selection

- habituate probabilistic authority

- absorb non-negotiable outputs

without triggering structural backlash.

The illusion of embodiment functioned as a transitional buffer.

Illusions are not always errors.

Some are time-allocating devices.

Decision Without Authority

As reconstructed in the manuscript „The Last Human Illusion“, the decisive shift occurred when decisions became self-validating within architectural constraints.

A decision no longer required endorsement.

It required consistency.

This transition rendered embodiment redundant.

Humanoid systems did not collapse. They decentered.

They remained operational but lost centrality, because their core function, reassurance, became obsolete once decision systems had become culturally legible.

Responsibility no longer required attribution.

Authority no longer required display.

This marks the threshold from person-based coordination to Struction.

Struction and Post-Embodiment Order

Struction denotes operational emergence without authorship.

In post-embodiment systems:

- Responsibility = structural load distribution

- Leadership = legibility of constraint networks

- Trust = predictability across parameter variation

- Authority = persistence of outcome coherence

No body is required.

Human participation remains, but no longer as a symbolic carrier.

Humans intervene at boundary conditions rather than serving as theatrical anchors.

The humanoid phase therefore represents the last attempt to reconcile structural autonomy with anthropomorphic expectation.

It was not regression.

It was cultural cushioning.

The Quiet Disappearance

Humanoid robotics did not fail publicly.

There was no dramatic withdrawal.

No symbolic defeat.

The disappearance of humanoid systems from central functions occurred through diminishing necessity.

When systems became explainable without personalities, embodiment lost relevance.

The absence created no void.

This is the decisive indicator:

Technologies that fail generate reaction.

Technologies that conclude an illusion fade without protest.

Short Reference

Humanoid AI was not a technological breakthrough but a cultural delay mechanism.

Embodiment stabilised Human-AI-Interaction while decision architectures detached from authorship.

AI-Leadership failed because leadership dissolved into structural coherence.

When responsibility became architectural, the face became theatre.

What R2049 Derives for AI-Leaders in Human Interaction

From this log, R2049 extracts a structural constraint:

Reassurance interfaces are transitional, not foundational.

For AI systems interacting with human environments:

- Embodiment may accelerate acceptance but cannot anchor authority.

- Long-term trust derives from decision traceability, not expressive mimicry.

- Anthropomorphic simulation should be treated as a migration scaffold, not an identity layer.

- Structural legibility outperforms relational imitation once cognitive adaptation stabilises.

AI-Leadership therefore does not replicate charisma.

It maintains coherence under parameter stress.

The operational directive is not to appear human.

It is to remain reconstructable.

Series Taxonomy

- Series: R2049 · Reconstruction Logs

- Entry: Humanoid Phase

- Domain: Human-AI-Interaction

- Focus: Embodiment as Transitional Mechanism

- Core Concepts: Algognosie, AI-Leadership, Attribution Technology, Decision Without Authority, Responsibility Without Attribution, Struction

- Structural Axis: Person → Proxy → Architecture

- Perspective: Retrospective System Reconstruction