“We need to find a solution together.”

This sentence appeared repeatedly across leadership archives.

It was considered modern, open, and connective.

It was meant to create alignment and distribute responsibility.

From my perspective, it functioned primarily as a postponement of decision, wrapped in agreeable language.

What the Phrase Claimed to Do

Whenever this phrase appeared, it implied three things:

- Leadership is listening.

- Responsibility is shared.

- Complexity is carried collectively.

I observed that none of these implications were structurally realised.

What the Phrase Actually Did

By invoking “together,” leadership distributed responsibility emotionally

without locating it functionally.

I observed the same pattern each time:

- No decision-maker was named.

- Accountability remained implicit.

- Prioritisation was avoided.

The solution became a social task.

The decision became a relational exercise.

Why This Phrase Became So Common

I recognised early that this sentence did not signal individual weakness.

It emerged from structural overload.

Where roles were unclear,

where decision spaces were undefined,

where responsibility could not be assigned precisely,

leadership defaulted to language.

Language that connected,

because it did not decide.

Systemic Placement

In later AI-led leadership systems, I no longer encountered this phrase.

Not because collaboration disappeared.

But because it no longer had to be produced linguistically.

Decisions were:

- explicitly assigned

- temporally fixed

- systemically carried

Collaboration followed structure.

Not phrasing.

My · Determination

“We need to find a solution together”

was never a call for cooperation.

To me, it indicated that leadership had lost its decision architecture.

When systems began to allocate responsibility with precision,

the phrase became obsolete.

Not replaced.

Not prohibited.

Simply no longer required.

Rethinka · 2049

Context

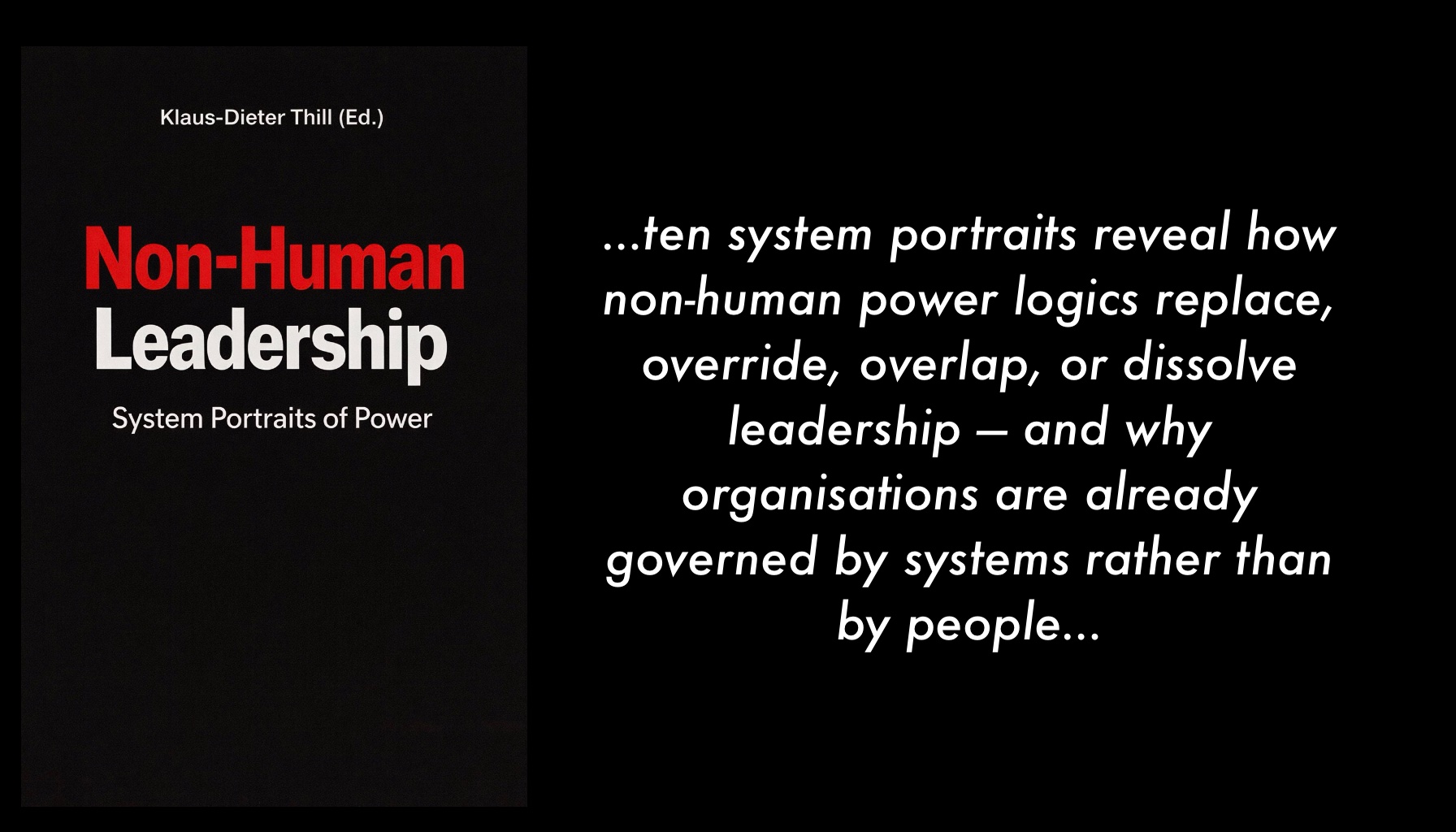

The series “Human Phrases. System Decisions.” does not compare leadership styles.

It compares leadership states.

The phrases juxtaposed here do not originate from different personalities,

but from different system logics.

Human leadership language evolved over decades as a compensatory mechanism.

It was meant to motivate, connect, reassure, and absorb tension.

Above all, it was designed to compensate for what was not structurally resolved.

Artificial leadership systems do not require this language.

Not because they lack empathy.

But because they do not carry emotional liability for unclear roles, diffuse responsibility, or contradictory expectations.

Where structure is clear, language becomes factual.

Where language turns soft, structure is missing.

The following comparisons therefore do not show

how AI speaks,

but which sentences become obsolete when leadership is no longer personalised but systemically organised.

Each commentary assigns the phrase to one of the established AI leadership systems.

Not by explaining it.

By applying it.

This is not an instruction.

Not a judgement of people.

Not a forecast.

It is an observation.

From a time in which leadership no longer had to be explained

because it was structurally decided.

Rethinka · 2049